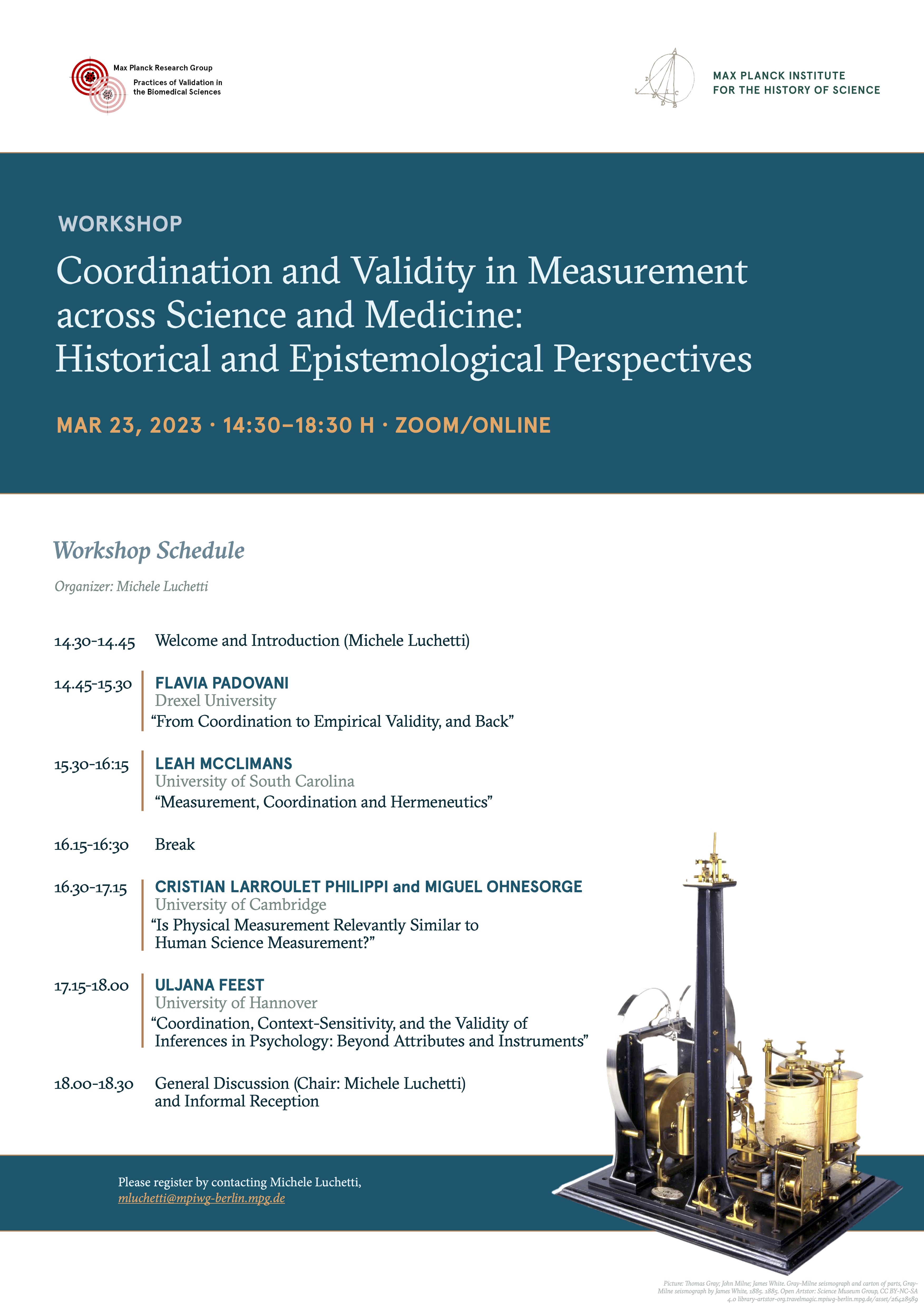

Mar 23, 2023

Coordination and Validity in Measurement across Science and Medicine: Historical and Epistemological Perspectives

- 14:00 to 18:45

- Workshop

- Max Planck Research Group (Biomedical Sciences)

- Several Speakers

- Uljana Feest

- Cristian Larroulet Philippi

- Leah McClimans

- Miguel Ohnesorge

- Flavia Padovani

A central problem for successful evidential uses of measurement has recently been addressed by noticing how the answers to the questions, “What counts as a measurement of X?” and “What is X?” often seem to presuppose one another, particularly when a theoretical understanding of the quantity of interest is weak (cf. van Fraassen 2008). In continuity with a similar concern raised in the early days of philosophy of science (Mach 1896, Reichenbach 1920; cf. Padovani 2015), recent literature in philosophy of measurement has discussed this circularity risk as the problem of coordination (e.g., van Fraassen 2008, McClimans 2013, Luchetti 2020, Ohnesorge 2022, Tal 2013) or the problem of nomic measurement (e.g., Chang 2004, Cartwright and Bradburn 2011). This problem, although cashed out in slightly different forms, has also been central to discussions of validity – particularly of measurement and construct validity – in psychometrics, education, and other human and health sciences (e.g., Cronbach and Meehl 1955, Borsboom 2005, Alexandrova and Haybron 2016, Alexandrova 2017, Feest 2020, Larroulet Philippi 2020). While dealing with virtually the same problem, these two branches of the literature have scarcely interacted with each other from a philosophical and methodological point of view. This workshop will bring together philosophers working in the HPS tradition who have dealt with either or both the problem of coordination and measurement/construct validity in science and medicine to address, among others, the following questions: Do coordination and measurement/construct validity concern the very same problem? If so, what disciplinary specificities have determined the emergence of two different labels and philosophical-methodological discussions? How can these two inform one another, particularly to advance our understanding of validity in the human and health sciences?
Can the tradition discussing coordination, more concerned with the physical sciences, provide relevant input for addressing issues of measurement/construct validity in, e.g., psychometrics? If not, why is that so?


Workshop Report by Simon Brausch & Michele Luchetti

In the last two decades, a renewed interest in measurement as a complex epistemic practice has gained momentum. Historians, philosophers, and sociologists of science have focused on several foundational metrological issues to understand the institutional and conceptual development of measurement within and across scientific disciplines. It is within this broader context that the Max Planck Research Group “Practices of Validation in the Biomedical Sciences,” based at the Max Planck Institute for the History of Science in Berlin, hosted the online workshop “Coordination and Validity in Measurement across Science and Medicine: Historical and Epistemological Perspectives” on March 23, 2023. The workshop brought together scholars at different stages of their careers who share an interest in two notions central to the historical and philosophical study of measurement methodology, namely coordination and validity.

The notion of coordination emerged from a specific epistemological discussion focusing on major developments in physical theory at the turn of the twentieth century. However, it has recently been revived by philosophers focusing on measurement in sciences beyond physics, including biomedicine, and even by measurement methodologists themselves, particularly in psychology. In this debate, coordination is understood as the process by which a solution to a specific measurement problem is achieved, viz., the problem of how scientists justify their belief that certain measurement procedures identify the quantity of interest in the absence of independent methods to assess them.

Validity and the related notion of validation as, respectively, an evaluative category and an evaluative practice are the main focus of the research group hosting the workshop. Debates on validity originated in the 1920s from the need to assess quantitative tools in psychology and education, and they rapidly extended to a variety of epistemic contexts across disciplines. However, validity and validation remain rather elusive notions, certainly because their meaning and application branched out along several disciplinary lineages, but also because of their broadly metamorphic, contextual, often value-laden character. Still, a core sense of validity seems to persist across time, understood as the extent to which an assessment tool, such as a measurement tool, captures what it is really intended to capture.

Despite their different disciplinary origins and criteria of application, both coordination and validity are concerned at their core with the risk of epistemic circularity in measurement. This risk arises when there is insufficient or inadequate justification for claiming that two measurement procedures are measuring the same attribute, or for claiming that a certain procedure is indeed measuring the attribute of interest. The centrality of this issue for the practice of measurement and for the universally recognized role of measurement as a key source of empirical evidence motivates the search for a tighter integration between discussions of coordination and validity across disciplines pursued by this workshop. The workshop featured four talks from invited speakers and a final open discussion. In what follows, we will address some of the main points that emerged from these contributions and we will briefly outline their relevance for further research.

The workshop opened with Flavia Padovani’s talk “From Coordination to Empirical Validity, and Back.” Padovani’s contribution focused on Hans Reichenbach’s conception of coordination and its relevance for contemporary philosophy and science. According to Padovani, Reichenbach’s neo-Kantian roots are central to his early view of physical coordination as a solution to the risk of circularity intrinsic to the use of abstract mathematical symbols to express physical laws. In Reichenbach’s view, physical judgements necessarily resort to approximations and are subject to the occurrence of errors, imprecisions, and uncertainty. In order to express physical laws in precise mathematical terms, we must presuppose certain principles, such as the principle of probability, that enable us to account for this incompleteness while expressing physical facts in mathematical language, thus endowing the mathematical expressions with empirical validity. In other words, these principles provide the coordination between the level of concrete phenomena and the level of mathematical symbols. Reichenbach stressed the notion of mutuality of coordination, meaning that coordination cannot be achieved simply by choosing axiomatic principles that establish the referential relationship between abstract concepts and concrete phenomena. In fact, our perceptual experience is the ultimate source of empirical confirmation because only through experience can we establish whether the chosen axioms lead to a consistent form of coordination. Therefore, the level of perception ultimately allows for the choice of (measurement) values that lead to a univocal form of coordination, that is, a univocal mapping between measured values and concrete physical states.
Padovani’s talk encouraged us to understand coordination as a process that responds to a problem of translation between concrete physical states and abstract mathematical symbols, as well as to a problem of validity, because without this translatability the mathematical equations of physics would not be empirically valid. This point highlights a connection between coordination and validity in their common relevance to the risk of epistemic circularity in measurement. In addition, the relevance of Kant’s influence on Reichenbach’s approach to coordination may be taken as inspiration for further work to address current issues related to validity by resorting to conceptual resources adapted from Kantian or neo-Kantian epistemological approaches.

The second contribution, “Measurement, Coordination and Hermeneutics” by Leah McClimans, also focused on the problem of circularity in measurement and the notion of coordination, but from a quite different perspective. McClimans characterizes the problem of circularity in measurement in terms of a hermeneutic circle. If we want to know whether a measuring instrument does a good job of capturing the phenomena of interest, it seems that we need to know already a great deal about these phenomena to assess the quality of our instrument. Extant approaches to this problem in philosophy of science, such as Hasok Chang’s coherentist proposal, aim at the stabilization of the hermeneutic circle over time. This stabilization usually entails the standardization of the phenomena of interest and of the measurement instruments.

However, it often occurs that standards get revised, some phenomena cannot be standardized, some measures are never stabilized, and questions of coordination are still present even when successful measurement seems to be achieved. These considerations, together with the rejection of an assumption-free ideal of scientific knowledge, lead McClimans to draw inspiration from Gadamer’s philosophical hermeneutics and regard hermeneutic dialogue, rather than standardization, as a more realistic and fruitful perspective on how progress in measurement is achieved. The intriguing take-home message of the talk can be summed up as follows: Let us embrace the hermeneutic circle and what comes along with the process of improving our understanding of it, rather than doggedly focusing on achieving, often preemptively, a solution to it. McClimans’ perspective also brought about interesting considerations about pluralism as a productive alternative to univocal coordination based on standardization as an overarching goal of measurement methodology. While we consider McClimans’ argument for the epistemic benefits of the hermeneutic circle and pluralism a promising perspective on both the coordination and the validity debates that has so far been understated, we believe that further investigation is required to examine how well these benefits scale in comparison to the epistemic costs of abandoning the goal of univocal coordination or validity in scientific measurement practices.

A joint talk by Cristian Larroulet Philippi and Miguel Ohnesorge, titled “Is Physical Measurement Relevantly Similar to Human Science Measurement?”, provided the third contribution to the workshop. The talk was motivated by debates surrounding the skepticism over the possibility of quantitative measurement in the human sciences. In particular, critics have claimed that the difficulties of implementing adequate experimental control of confounding factors in the measurement of attributes in the human sciences constitute a major obstacle for successful quantification, in disanalogy with the physical sciences. Larroulet Philippi and Ohnesorge presented a detailed case study from seismometry, in which successful measurement was not achieved through experimental control but, rather, via the medium of theory. In contrast with the physical attributes that have traditionally been the object of philosophical focus, the attributes measured by seismometry share several features with attributes in the human sciences, since they are multi-dimensional, causally complex, and value-laden. For this reason, the authors argued, this example can be taken as a better term of comparison when evaluating measurement practices and standards across the physical and human sciences. The authors raised an additional point, suggesting that the notion of coordination seems to preserve a certain semantic openness that is required to account for the changes in the theoretical definition of the measurand along the process of refining measurement standards, while debates on validity generally fail to illuminate the importance of this aspect. While some workshop participants considered this point to be controversial, it stimulated a fertile Q&A. The discussion addressed the similarity of the notions of coordination and construct validity, as well as the complications intrinsic to tracing a single, linear genealogy of the validity concept.
This Q&A was particularly fruitful in that the workshop participants put together their insights from diverse scholarly backgrounds to arrive collectively at a more nuanced understanding of both coordination and validity, as well as to find common ground for further exchange between the history and philosophy of these two notions, which have so far developed in relative isolation.

The final talk by Uljana Feest was titled “Coordination, Context-Sensitivity, and the Validity of Inferences in Psychology: Beyond Attributes and Instruments.” Feest introduced how the problem of epistemic circularity in measurement, also labelled the problem of nomic measurement, has recently been discussed by methodologists of psychology by referring to the notion of coordination. As Feest highlighted, the traditional Campbellian multitrait-multimethod approach to the validation of psychological measures, focused on sorting the variability due to the attribute from the variability due to the measuring instrument, overlooks the potential context-sensitivity of both the instrument and the attribute. On the one hand, an instrument can be particularly reliable in specific contexts or particularly prone to confounders in others. On the other, attributes might only be triggered in specific contexts, or they can be expressed differently in different contexts, thus requiring different instruments depending on context. According to Feest, the problem of context-sensitivity gives rise to an important “new nomic problem,” since the context-sensitivity of psychological attributes and of the instruments used to measure them plausibly prevents us not only from a) generating a-contextual data, but also from b) finding a-contextual ways of delineating the shape and scope of a psychological kind and, consequently, the extension and the internal structure of psychological constructs.

In the final open discussion, one major point of debate concerned how social and political considerations could be further developed starting from the contributions of the speakers. This observation partly reflected the absence of social scientists from the panel, which was composed of philosophers and historians of science, but it certainly prompts further crucial questions concerning the notions of coordination and validity, as well as their relationship. How did social and political influences shape the debates on coordination and validity, in addition to their different disciplinary origins? What would a social-epistemological perspective add to the understanding of the relationship between coordination and validity? These open questions certainly indicate that scholars focusing on these topics have much more to share and investigate.

Simon Brausch & Michele Luchetti

Contact and Registration

Please register by contacting Michele Luchetti at mluchetti@mpiwg-berlin.mpg.de